Latency is the delay between when data is sent and when it arrives, usually measured in milliseconds (ms). High latency causes familiar frustrations: high ping in online games, sluggish and unresponsive apps and websites, and stuttering video calls with dropped frames.
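One common way to put a number on latency is to time a round trip. The Python sketch below is one illustrative approach, not a standard tool: it times how long a TCP connection takes to open, which costs roughly one round trip. The function name `tcp_connect_latency_ms` is hypothetical, and the demo listens on a local port so it runs offline. Pointing it at a real host and port (for example, a web server on port 443) would measure network latency instead.

```python
import socket
import statistics
import time
from typing import List


def tcp_connect_latency_ms(host: str, port: int, samples: int = 5, timeout: float = 2.0) -> List[float]:
    """Time several TCP handshakes to host:port and return each duration in milliseconds."""
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        # create_connection returns only after the TCP three-way handshake completes.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        results.append((time.perf_counter() - start) * 1000)
    return results


if __name__ == "__main__":
    # Self-contained demo: open a listener on localhost so no internet access is needed.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        server.listen()
        port = server.getsockname()[1]
        times = tcp_connect_latency_ms("127.0.0.1", port)
        print(f"median connect latency: {statistics.median(times):.3f} ms")
```

Taking the median of several samples smooths out one-off spikes, which matters because individual measurements can vary quite a bit.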

Latency is often conflated with speed, bandwidth, and throughput, and the terms are sometimes used interchangeably, but they measure different things. Speed, bandwidth, and throughput are all measured in bits per second, such as megabits per second (Mbps) or gigabits per second (Gbps), yet there are key differences among them:

- Bandwidth is the theoretical maximum amount of data that can be transferred over a given period of time under ideal network conditions.

- Speed is often used interchangeably with bandwidth, especially by internet service providers (ISPs). It isn’t a strict term, however, so speed can refer to various actual or theoretical measurements.

- Throughput is the rate of data transfer actually measured over a period of time, so it reflects the combined effects of latency, bandwidth, and other factors.

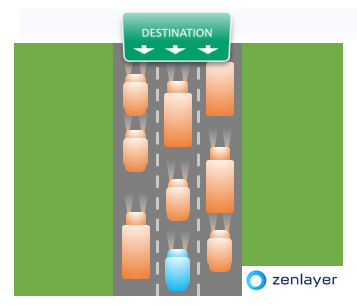

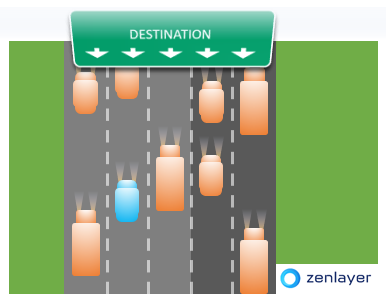

As an example of how these terms relate to one another, imagine internet traffic as real traffic on the freeway. We want to improve throughput to get the blue car to its destination as soon as possible:

The road (network) has a speed limit of 65 mph (theoretical speed), but it’s overcrowded with too many cars (data) and not enough lanes (low bandwidth), so everyone’s moving at around 10 mph. Instead of getting to its appointment at 3 a.m. as expected, the blue car will now arrive significantly late (high latency). This is what happens when you transfer data over a network with low bandwidth: the result is high latency and, consequently, low throughput.

If we add more lanes (increase bandwidth), more cars (data) can move at once, so the blue car now makes its appointment with almost no delay (low latency). Even with the same number of cars as in the first example, everyone moves faster (high throughput) because there’s more room to maneuver around backed-up lanes and slower cars.

Achieving high network throughput requires both high bandwidth and low latency. Increasing bandwidth can be as simple as upgrading your hardware or internet package, but lowering latency takes more work.
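The link between latency and throughput is more than an analogy. A single TCP connection can have at most one window of unacknowledged data in flight per round trip, so its throughput is capped at roughly window size divided by round-trip time (RTT), however much bandwidth the link has. The Python sketch below illustrates that bound. The 64 KiB window and 1,000 Mbps link are assumptions chosen for illustration; modern systems usually negotiate larger windows through window scaling, but the relationship is the same.

```python
def max_tcp_throughput_mbps(window_bytes: int, rtt_ms: float, link_mbps: float) -> float:
    """Upper bound for one TCP flow: the smaller of the link rate and window / RTT."""
    window_limited_mbps = (window_bytes * 8) / (rtt_ms / 1000) / 1_000_000
    return min(link_mbps, window_limited_mbps)


WINDOW_BYTES = 64 * 1024  # assumed 64 KiB window (no window scaling)
LINK_MBPS = 1000          # assumed gigabit link

for rtt in (10, 50, 150):
    bound = max_tcp_throughput_mbps(WINDOW_BYTES, rtt, LINK_MBPS)
    print(f"RTT {rtt:>3} ms -> at most {bound:.1f} Mbps per connection")
```

With these assumed numbers, the same gigabit link tops out at roughly 52 Mbps per connection at 10 ms RTT, about 10.5 Mbps at 50 ms, and about 3.5 Mbps at 150 ms. Extra bandwidth does nothing for that single connection until latency comes down.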

Let’s first look at the factors that contribute to high latency.

What leads to high latency?

The primary contributors to high latency are distance, congestion, hardware, and content.

1. Distance

When you send a message to someone anywhere in the world, your message must travel through physical wires and cables, whether buried underground or laid across the seafloor. This type of latency is one of the hardest to overcome because digital communications are still bound by the laws of physics: the longer the physical distance data has to travel, the longer it takes to arrive.
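A rough calculation shows why distance sets a hard floor on latency. Light in optical fiber travels at roughly 200,000 km per second, about two-thirds of its speed in a vacuum, so every 1,000 km of cable adds about 5 ms each way. This Python sketch assumes a straight cable path with no other delays; real routes are longer and add processing time, so actual round-trip times will be higher.

```python
FIBER_KM_PER_S = 200_000  # approximate speed of light in glass fiber (about 2/3 of c)


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone (there and back)."""
    return 2 * distance_km / FIBER_KM_PER_S * 1000


for km in (1_000, 5_000, 10_000):
    print(f"{km} km -> at least {min_rtt_ms(km):.0f} ms round trip")
# 1000 km -> at least 10 ms round trip
# 5000 km -> at least 50 ms round trip
# 10000 km -> at least 100 ms round trip
```

No hardware upgrade can beat these numbers, which is why the strategies later in this article focus on moving content and compute closer to users.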

Another factor that compounds this type of latency is whether the networks carrying your traffic have established peering agreements. If the networks along the way don’t play well with each other, your traffic will be sent along more roundabout routes that add further delay. Data that traverses many different networks is also more likely to be bottlenecked by inefficiencies that compound across every network involved.

2. Congestion

From the freeway analogy above, we learned that bandwidth affects both latency and throughput. A network with high bandwidth allows more data to be transferred at once. This applies both to your own network and to the network of the server you’re connecting to.

For instance, if your bandwidth cap is lower than that of the server sending you traffic, the server will have to slow down to accommodate your congested network, and vice versa. Keep in mind that having too many devices connected to your local network can also cause congestion as they compete for the same bandwidth.
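To make the "slower side wins" idea concrete, here is a rough Python model. It assumes, for simplicity, that active devices split your plan’s bandwidth evenly (real traffic rarely shares that neatly), and `per_device_rate_mbps` is a hypothetical helper, not a real tool.

```python
def per_device_rate_mbps(server_mbps: float, plan_mbps: float, active_devices: int) -> float:
    """Rough best-case rate for one device downloading from one server."""
    # Assumption: all active local devices share the plan's bandwidth equally.
    local_share = plan_mbps / active_devices
    # A transfer can go no faster than the slower of the two ends.
    return float(min(server_mbps, local_share))


print(per_device_rate_mbps(server_mbps=1000, plan_mbps=100, active_devices=1))   # 100.0
print(per_device_rate_mbps(server_mbps=1000, plan_mbps=100, active_devices=10))  # 10.0
print(per_device_rate_mbps(server_mbps=50, plan_mbps=100, active_devices=1))     # 50.0
```

In the first two cases your own connection is the bottleneck, and it gets tighter as more devices come online; in the last case the server is.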

Public networks are another source of congestion, particularly during peak hours. If your network is shared with an entire office building, for example, or most of your region subscribes to the same internet service provider (ISP), you’ll likely experience congestion when everyone is working online during the day.

You have no doubt experienced one prominent effect of congestion: jitter, the variation in how long packets take to arrive, which shows up as stuttering voice or video during a conference call.
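Jitter can be quantified. RFC 3550, the RTP specification used by many voice and video apps, defines an interarrival jitter estimate: for each packet, compare its transit time with the previous packet’s, then fold the absolute difference into a running average with a gain of 1/16. The Python sketch below follows that formula; the timestamps are made-up example values in milliseconds.

```python
from typing import List


def rfc3550_jitter(send_ms: List[float], recv_ms: List[float]) -> float:
    """Running interarrival jitter estimate following the RFC 3550 formula."""
    jitter = 0.0
    for i in range(1, len(send_ms)):
        # Transit time = arrival time - send time (a constant clock offset cancels out below).
        prev_transit = recv_ms[i - 1] - send_ms[i - 1]
        curr_transit = recv_ms[i] - send_ms[i]
        d = abs(curr_transit - prev_transit)
        # Exponential smoothing with a gain of 1/16, as in RFC 3550.
        jitter += (d - jitter) / 16
    return jitter


sent = [0, 20, 40, 60]      # a voice packet every 20 ms
steady = [50, 70, 90, 110]  # constant 50 ms delay: no jitter
bumpy = [50, 70, 95, 110]   # the third packet is delayed by an extra 5 ms
print(rfc3550_jitter(sent, steady))  # 0.0
print(rfc3550_jitter(sent, bumpy))   # 0.60546875
```

Note that the steady stream has high latency but zero jitter: it’s the variation, not the delay itself, that makes calls stutter.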

3. Hardware

No matter how open the roads are or how high the speed limit, you won’t be getting anywhere anytime soon if your car can’t accelerate above 10 mph. The same goes for your hardware.

If your router isn’t equipped to handle traffic efficiently, for example, it won’t be able to quickly process incoming or outgoing data. When traffic comes at a faster rate than your router can handle, data gets queued within a buffer to await processing, causing delays.
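The buffering described above behaves like a first-in, first-out (FIFO) queue. This simplified Python model assumes a router that spends a fixed time processing each packet (real routers vary), and shows how waiting times grow when a burst arrives faster than the router can keep up.

```python
from typing import List


def queueing_delays_ms(arrivals_ms: List[float], service_ms: float) -> List[float]:
    """FIFO queue with a fixed per-packet processing time; returns each packet's wait in the buffer."""
    delays = []
    router_free_at = 0.0
    for t in arrivals_ms:
        start = max(t, router_free_at)  # wait in the buffer if the router is still busy
        delays.append(start - t)
        router_free_at = start + service_ms
    return delays


burst = [0.0, 1.0, 2.0, 3.0, 4.0]  # one packet arriving every 1 ms
print(queueing_delays_ms(burst, service_ms=1.5))  # [0.0, 0.5, 1.0, 1.5, 2.0]
print(queueing_delays_ms(burst, service_ms=0.5))  # [0.0, 0.0, 0.0, 0.0, 0.0]
```

When processing takes longer than the gap between arrivals, every packet waits a little longer than the one before it; a faster router keeps the buffer empty.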

Similarly, if your server’s CPU faces more demand than it can handle, such as during a sudden traffic spike, its performance can degrade as requests pile up waiting to be processed.

4. Content

You might be surprised to find that the content on your website can increase latency for your visitors. If your website runs on complex code or your pages are filled with plugins, videos, or other content with large file sizes, users will experience slow load times. That’s bad news, considering Google has found that 53% of mobile visitors abandon a page that takes longer than 3 seconds to load.

Wondering how many people browse on mobile nowadays? Over 90% of the global internet population.

4 ways to reduce latency

Although latency is virtually impossible to eliminate entirely, the good news is that you can take proactive steps to minimize it to give your users faster, smoother, and more responsive digital experiences.

1. Overcome distance limitations

Compute at the edge

Because many cross-border businesses today have user bases spread across continents, they’re adopting the strategy of hosting their latency-sensitive apps and services at the edge, meaning the part of the network closest to end users. This strategy negates much of the effect of distance-based latency by moving what users are trying to reach closer to where they are.
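As a simplified illustration of "moving what users are trying to reach closer to them", the Python sketch below picks the geographically nearest of several edge sites using great-circle (haversine) distance. The site names are hypothetical and the coordinates are approximate city locations; in practice, providers typically route users based on measured network conditions rather than pure geography, so treat this as a sketch of the idea only.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical edge sites with approximate (latitude, longitude) coordinates.
EDGE_SITES = {
    "edge-frankfurt": (50.11, 8.68),
    "edge-singapore": (1.35, 103.82),
    "edge-los-angeles": (34.05, -118.24),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_site(user_lat: float, user_lon: float) -> str:
    """Return the edge site with the shortest great-circle distance to the user."""
    return min(EDGE_SITES, key=lambda name: haversine_km(user_lat, user_lon, *EDGE_SITES[name]))


print(nearest_site(48.86, 2.35))    # a user in Paris -> edge-frankfurt
print(nearest_site(35.68, 139.69))  # a user in Tokyo -> edge-singapore
```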

Edge compute servers come in two primary flavors: bare metal and virtual machines (VMs). A bare metal server is a physical server that you rent from a service provider in a location close to your users. A VM is a virtualized, logical partition of a physical server that functions almost identically to bare metal.

The biggest difference between the two is that when you rent a bare metal server, you’re the only tenant on that machine and retain full control of it, whereas a VM may share the machine with other VMs, which limits the scope of your control.

Distribute with a CDN

If your latency issues are multifactorial, as they often are, consider adding a content delivery network (CDN) to your overall solution. A CDN is a group of geographically distributed servers that cache content close to users for quick access.

CDN providers store cached files (such as images or videos) from your origin server in data centers around the world. Content requests are then routed to the data centers nearest to users for quick delivery.
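Conceptually, each CDN data center acts like a cache with an expiry time. The class below is a toy Python sketch of that idea, not any provider’s actual implementation: `EdgeCache`, the 60-second time-to-live (TTL), and the `origin` function are all hypothetical. The first request for a file goes back to the origin server (a cache miss); later requests within the TTL are served locally (cache hits).

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Toy CDN edge node: serve cached copies until they expire, otherwise fetch from origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_s
        self._clock = clock
        self._store: Dict[str, Tuple[bytes, float]] = {}  # path -> (content, expires_at)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[1] > now:
            self.hits += 1  # fresh copy at the edge: no trip to the origin
            return entry[0]
        self.misses += 1
        content = self._fetch(path)  # slow, long-distance request to the origin server
        self._store[path] = (content, now + self._ttl)
        return content


def origin(path: str) -> bytes:
    # Stand-in for a request to your origin server.
    return f"contents of {path}".encode()


cache = EdgeCache(origin, ttl_s=60)
cache.get("/images/hero.jpg")  # miss: fetched from origin
cache.get("/images/hero.jpg")  # hit: served from the edge
print(cache.hits, cache.misses)  # 1 1
```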

It’s important to note that although CDN servers are distributed in the same way as edge compute servers, standard CDN servers are built to cache and deliver content and lack the flexibility and general compute capabilities of bare metal and virtual machines. In essence, a CDN is designed to deliver large amounts of content to your users faster, whereas bare metal servers and virtual machines let you deploy applications closer to your users, scale your resources easily, and customize them to serve whatever compute function you need.

2. Mitigate network congestion

Go private on a global backbone

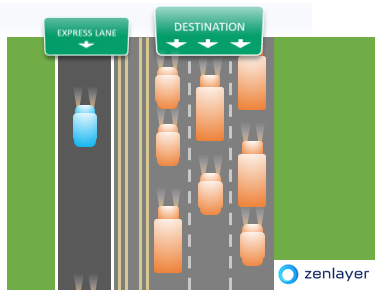

What if, in the traffic example shown at the beginning of this article, instead of adding bandwidth, we moved the blue car onto an express lane?

The blue car is now routed off the busy road and onto a faster, more predictable path away from general congestion.

You can think of a private network as the express lane of the internet. Putting your mission-critical apps on a global private backbone gives your users a direct route to your services unburdened by public network congestion, resulting in improved uptime and user experience.

Hosting through a CDN

Besides reducing distance-based latency through their proximity to users, CDNs also help with congestion: letting a CDN provider host your oversized images, videos, and other media, instead of serving them from your own servers, frees up bandwidth on your network.

Buy more bandwidth

Upgrading your internet package is the most straightforward solution if inadequate bandwidth is the primary reason for your network woes. Although you’ll no doubt be paying a higher fee, you’ll also improve the reliability and availability of your applications and services.
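Whether a bandwidth upgrade pays off depends on what you’re transferring. A simplified Python model: total time is one round trip to request the data plus the time needed to push its bits through the link. It ignores TCP ramp-up, retransmissions, and protocol overhead, and the 50 ms round trip and file sizes are assumed example values.

```python
def transfer_time_ms(size_bytes: int, bandwidth_mbps: float, rtt_ms: float) -> float:
    """Simplified model: one round trip to request the data, plus time to send its bits over the link."""
    serialization_ms = size_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000
    return rtt_ms + serialization_ms


for label, size in (("50 KB web page", 50_000), ("500 MB file", 500_000_000)):
    for mbps in (100, 1000):
        print(f"{label} at {mbps} Mbps: {transfer_time_ms(size, mbps, rtt_ms=50):,.0f} ms")
```

Under these assumptions, a tenfold upgrade cuts the large download from about 40 seconds to about 4, but barely changes the small page, whose load time is dominated by the 50 ms round trip. If your traffic is mostly large transfers, more bandwidth helps; if it’s mostly small, chatty requests, latency is the thing to attack.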

3. Keep hardware updated

Upgrade your devices

Paying for a plan with higher bandwidth won’t help if your router is already choking on the current network load. Regularly check that your devices and their firmware are up to date and rated for your business’s real processing and bandwidth needs to prevent hardware bottlenecks.

Rent your servers

Depending on the size and complexity of the network and number of servers, the costs of upgrades and replacements can be challenging for smaller businesses and startups.

Renting servers from a trusted service provider helps convert a sizable chunk of your business’s capital expenditures (capex) into operating expenses (opex). The bare metal servers and VMs discussed above are both examples of rented servers that require no heavy upfront investment and instead give you a more digestible, predictable monthly cost.

When you rent, you won’t have to worry about outdated routers or CPUs because your service provider is 100% responsible for your hardware maintenance and replacements. Not only does this save you from the sticker shock that comes with upgrading and replacing hardware every so often, but it also helps streamline your operations.

Although both are highly scalable, one benefit particular to VMs is that they can accommodate a wider range of use cases, because they can be sized as small as 1 vCPU / 1 GB RAM or scaled up to virtually any amount of processing power or RAM you need. Depending on your use case, this can make VMs more cost-effective than renting a full server.

4. Optimize web content

Reduce file sizes and dimensions

Videos and images are among the greatest consumers of bandwidth on a web page because of their file size. Compressing your media before adding it to your website (while ensuring it remains crisp and legible) will give your visitors a smoother browsing experience.

If your files are still too large even after compression, consider cropping images to display only the most important features and trimming the non-essential parts of videos. Keep in mind that scaling your media to a reasonable dimension can also make a big difference – does that picture of a happy puppy need to be 3840×2160, or would it serve the same function at 1280×720?
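The puppy example is easy to quantify. Going from 3840×2160 to 1280×720 divides each side by 3, so the image has 9 times fewer pixels. The Python sketch below computes the uncompressed size of each, assuming 24-bit color (3 bytes per pixel). Compressed formats such as JPEG won’t shrink in exact proportion, but fewer pixels generally means a much smaller file.

```python
def raw_size_mib(width: int, height: int, bytes_per_pixel: int = 3) -> float:
    """Uncompressed image size in MiB (24-bit RGB by default)."""
    return width * height * bytes_per_pixel / (1024 * 1024)


big = raw_size_mib(3840, 2160)
small = raw_size_mib(1280, 720)
print(f"3840x2160: {big:.1f} MiB, 1280x720: {small:.1f} MiB, ratio {big / small:.0f}x")
# 3840x2160: 23.7 MiB, 1280x720: 2.6 MiB, ratio 9x
```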

Clean up code, scripts, and plugins

When a website’s code gets long and messy, particularly if you rely on a website builder and use many plugins, your visitors will experience protracted load times that can drastically increase your bounce rate. Similarly, too many CSS and JavaScript files can greatly increase latency for first-time visitors as their browsers try to load all the files (and media!) at once.

Enabling browser caching can alleviate some of these latency issues for returning visitors, but to truly improve the experience for all users, consider cleaning up your code and removing resource-heavy plugins. Tools like a code minifier can further streamline your pages and improve load time by stripping whitespace, comments, and other non-essential characters from your code.
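To show what a minifier actually does, here is a deliberately naive CSS minifier in Python built on regular expressions. It is only a sketch: `naive_minify_css` is a hypothetical helper, and because it doesn’t parse CSS, it can mangle whitespace or comment-like text inside quoted strings. For production sites, use an established minifier in your build pipeline.

```python
import re


def naive_minify_css(css: str) -> str:
    """Strip comments and unnecessary whitespace from CSS (naive: does not parse strings)."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)  # remove /* ... */ comments
    css = re.sub(r"\s+", " ", css)                   # collapse runs of whitespace
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)     # drop spaces around braces, semicolons, commas, >
    css = re.sub(r":\s+", ":", css)                  # "color: red" -> "color:red"
    css = css.replace(";}", "}")                     # the last semicolon in a block is optional
    return css.strip()


source = """
/* Header styles */
h1, h2 {
    color: red;
    margin: 0 auto;
}
"""
print(naive_minify_css(source))  # h1,h2{color:red;margin:0 auto}
```

The savings on a few lines are small, but across large stylesheets and scripts, removing comments and whitespace adds up, and every byte saved is a byte the browser doesn’t have to download.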

Minimize latency with Zenlayer today!

Although latency is unavoidable, Zenlayer’s advanced suite of compute and networking services can help your business minimize it. Our global network of 290+ points of presence (PoPs) and 5400+ peers around the world lets you bring your apps and services close to your users for silky-smooth digital experiences that turn them into happy repeat customers.

If you’re looking to decrease latency and increase your revenue, be sure to talk to a solution expert today!